How AI Works: Large Language Models

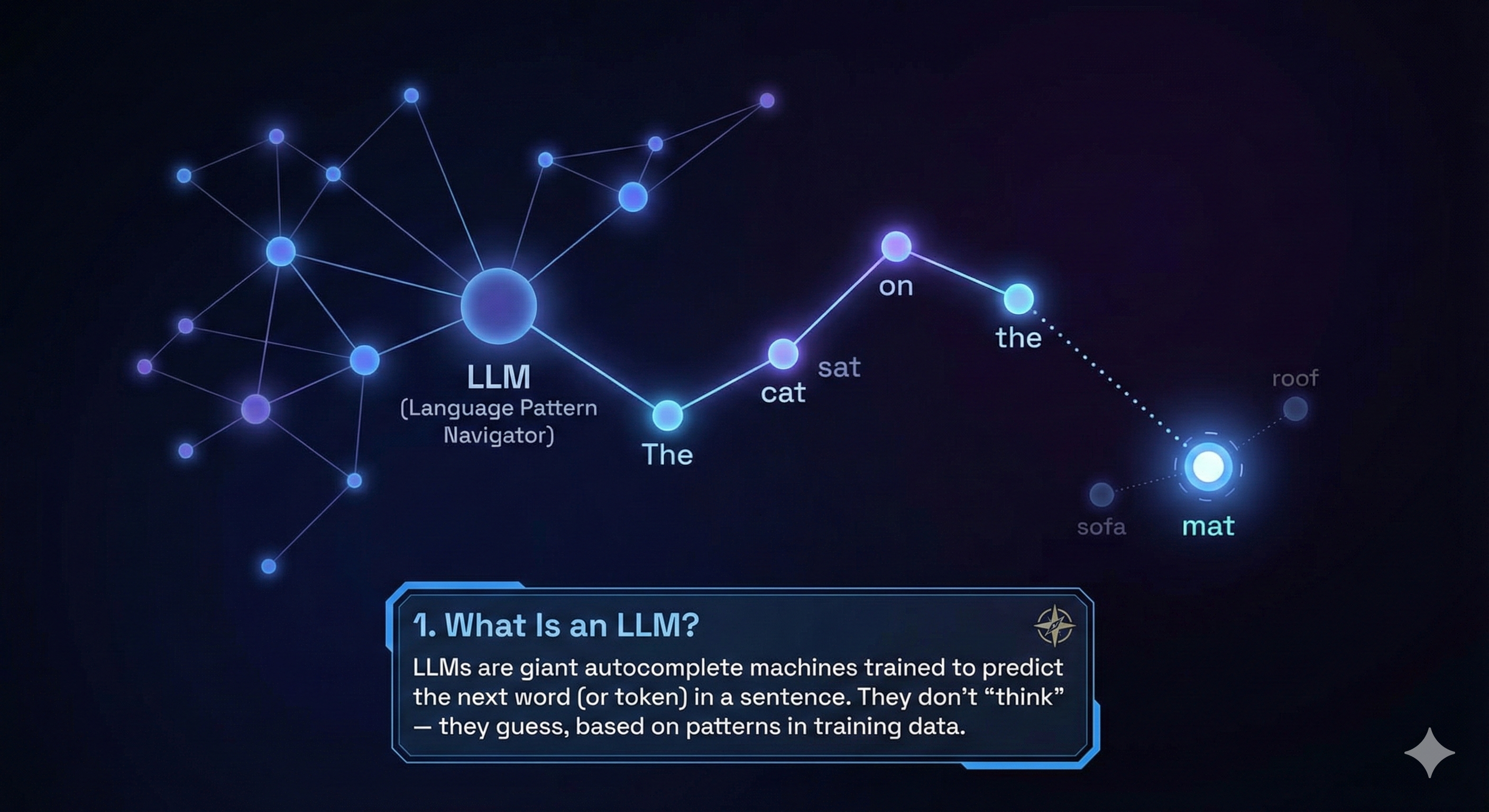

A large language model (LLM) is a kind of AI trained to generate text that sounds human. You give it some input (a question, prompt, or command), and it predicts what should come next—one token at a time.

LLMs are trained on huge amounts of text data: books, websites, code, transcripts, and more. Their goal is to learn patterns in language—grammar, facts, styles, reasoning steps—without being explicitly told what’s “correct.”

The secret to their power lies in sheer scale. Bigger models (more parameters) and bigger datasets (more examples) tend to perform better—though they also get harder to train and control. That’s why LLMs often need special training strategies to behave safely and helpfully.

Most modern LLMs are built using transformers Neural network architecture that processes input all at once using self-attention, enabling faster training on large datasets. , a powerful neural network architecture that replaced older approaches like RNNs Recurrent Neural Networks process data sequentially and are good for language or time-series, but struggle with long-term dependencies. and LSTMs A type of RNN with memory gates to solve the vanishing gradient problem and preserve long-term context. . Transformers made it possible to train on much larger datasets and learn long-range patterns in text.

Engineering Lens: Predictive text generation is just the surface—underneath is pattern recognition across billions of words.

Memory trick: An LLM doesn’t “think”—it predicts.