EMBEDDINGS

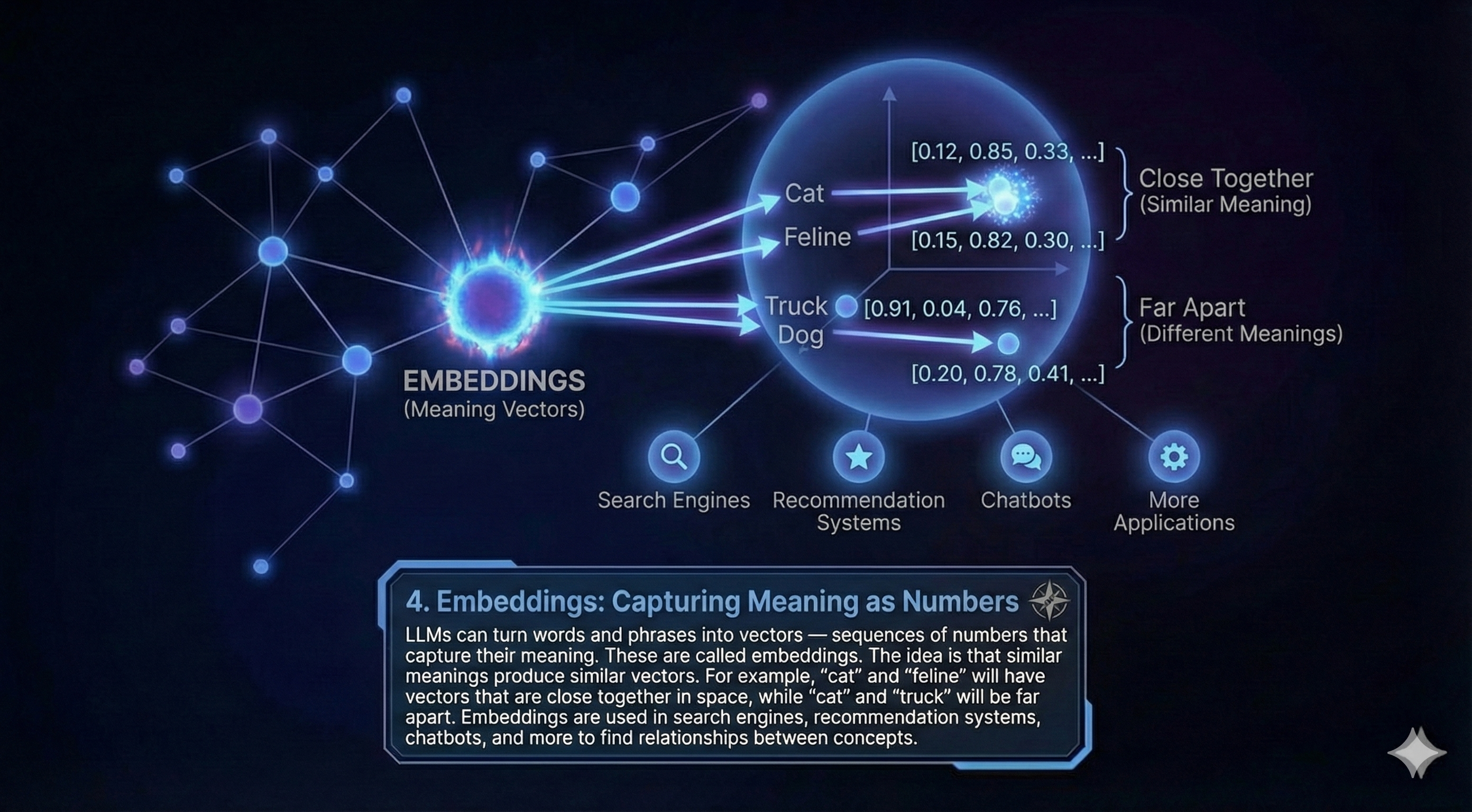

Embeddings are how words, phrases, or even entire documents get translated into numbers so machines can understand relationships between them.

In an LLM, each word is represented as a dense vector in high-dimensional space — like a location on a map with hundreds of dimensions. The closer two vectors are, the more semantically similar the concepts are.

These embeddings are what power search, recommendations, and understanding. They're the "language of meaning" inside the model.

Think of it like: A dictionary where every word has GPS coordinates instead of a definition.

"Cat" and "dog" are nearby. "Economics" and "pasta" are not.

What to remember

- Embeddings = mathematical meaning.

- They allow models to generalize, cluster, and reason.

- Used in vector databases, RAG systems, and similarity search.

- You can build your own embeddings using tools like OpenAI, HuggingFace, or Sentence Transformers.