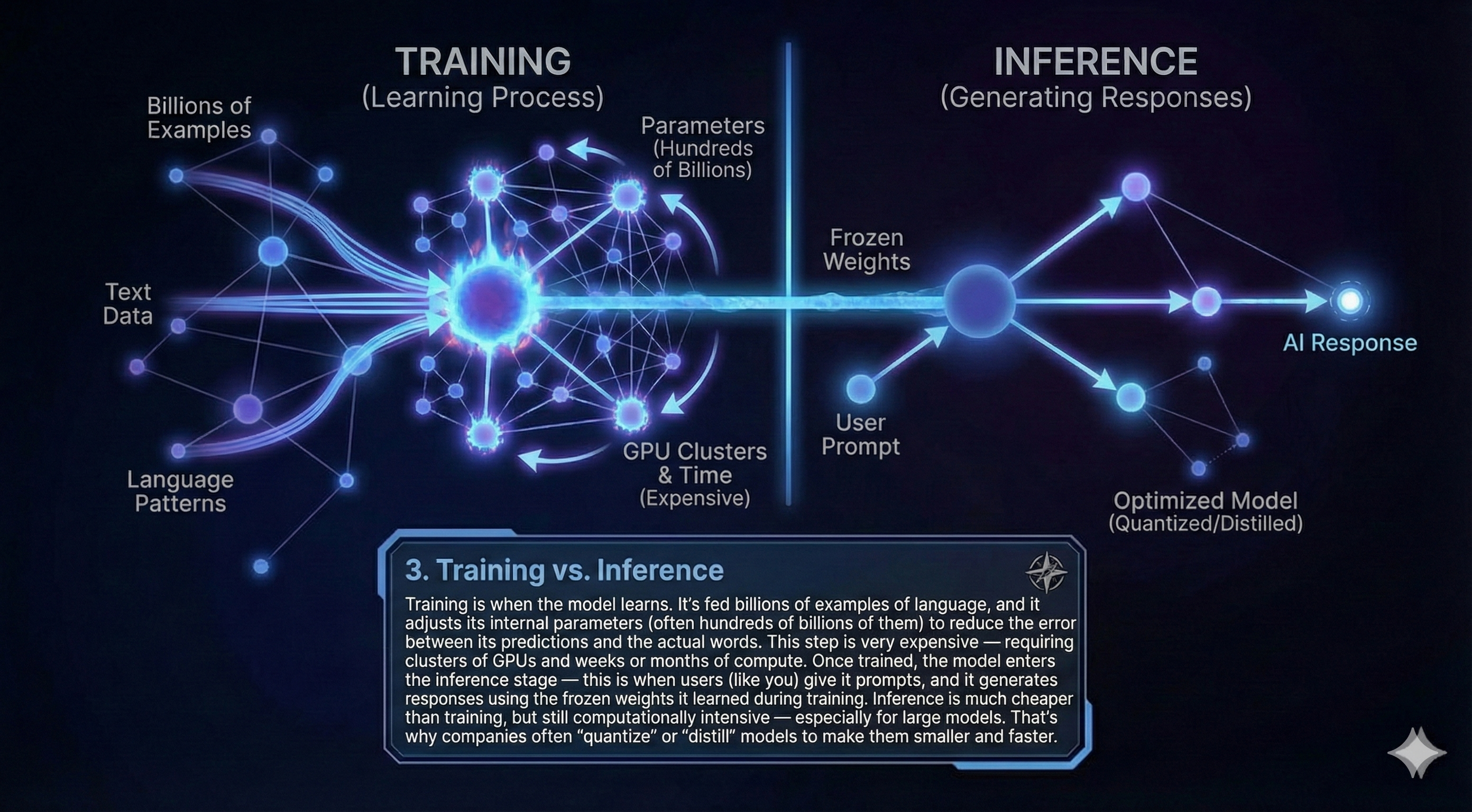

TRAINING vs INFERENCE

Once a model is built, it goes through two very different phases: training and inference.

Training is when the model learns — it's fed massive amounts of data and adjusts its internal weights through trial and error. This is compute-heavy and only happens once (or occasionally, with updates).

Inference is when the trained model is actually used. You type a prompt; the model predicts the next word, over and over, until it's done. Inference is faster and cheaper than training, but still resource-intensive for large LLMs.

Engineering lens: Training is like building the engine. Inference is turning the key and driving.

Memory trick: "Train once, infer many times."

What to remember

- Training: slow, expensive, massive data and compute.

- Inference: fast-ish, happens every time you use ChatGPT or similar tools.

- They run on different hardware setups: training uses massive clusters; inference often happens on the edge or cloud.